PlAwAnSaI

Administrator

- AWS Systems Manager requires an IAM role for the EC2 instances that it manages, so that it can perform actions on their behalf. This IAM role is passed to the instance through an instance profile. If an instance is not managed by Systems Manager, one likely reason is that the instance has no instance profile, or that the instance profile lacks the permissions Systems Manager needs to manage the instance.

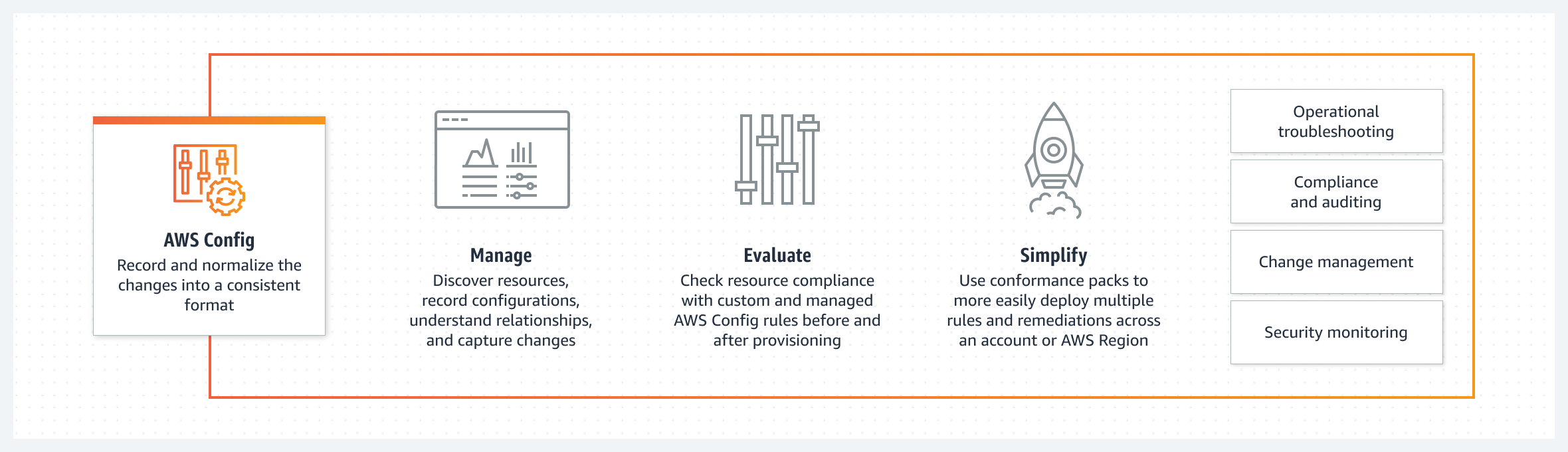
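As a rough sketch of what such a role needs, the snippet below builds the trust policy that lets EC2 assume the role and names the AWS managed policy usually attached for Systems Manager. It does not call AWS. The helper `has_instance_profile` is a hypothetical name, and it assumes the instance dictionary has the shape returned by an EC2 describe-instances call.

```python
# Trust policy: lets the EC2 service assume the role on behalf of the instance.
EC2_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# AWS managed policy with the core permissions the Systems Manager agent needs.
SSM_CORE_POLICY_ARN = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"


def has_instance_profile(instance: dict) -> bool:
    """Return True if the instance description carries an instance profile.

    Assumes `instance` looks like one entry from a describe-instances
    response, where an attached profile appears under "IamInstanceProfile".
    """
    return bool(instance.get("IamInstanceProfile"))
```

An instance for which `has_instance_profile` returns False is a first candidate when troubleshooting why it does not show up as managed.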

- A company has a new requirement stating that all resources in AWS must be tagged according to a set policy.

AWS Config should be used to enforce the policy and to continually identify all resources that do not comply with it.
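One way to express this is with the AWS managed Config rule `REQUIRED_TAGS`. The sketch below only builds the rule definition as a dictionary; the rule name and tag keys are hypothetical, and passing the result to a Config client is left out.

```python
import json


def required_tags_rule(rule_name: str, tag_keys: list) -> dict:
    """Build a Config rule definition based on the REQUIRED_TAGS managed rule.

    The managed rule takes its tag keys as tag1Key, tag2Key, ... up to six.
    """
    if not 1 <= len(tag_keys) <= 6:
        raise ValueError("REQUIRED_TAGS accepts between one and six tag keys")
    params = {f"tag{i}Key": key for i, key in enumerate(tag_keys, start=1)}
    return {
        "ConfigRuleName": rule_name,
        "Source": {"Owner": "AWS", "SourceIdentifier": "REQUIRED_TAGS"},
        # Config expects the parameters as a JSON string, not a nested object.
        "InputParameters": json.dumps(params),
    }


# Hypothetical tag policy: every resource needs CostCenter and Owner tags.
rule = required_tags_rule("required-tags-policy", ["CostCenter", "Owner"])
```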

- A company's static website hosted on Amazon S3 launched recently and is being used by tens of thousands of users. Since then, website users have been experiencing 503 Service Unavailable errors.

These errors occur because the request rate to Amazon S3 is too high.

- A SysOps Admin needs to receive an email whenever critical, production Amazon EC2 instances reach 80% CPU utilization.

This can be achieved by creating an Amazon CloudWatch alarm and configuring an Amazon SNS notification.

CloudWatch Events is used for state changes, not for metric threshold breaches.
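A minimal sketch of the alarm settings, written as the parameter set a CloudWatch put-metric-alarm call would take. The alarm name format, the 5-minute period, the single evaluation period, and the instance ID and topic ARN in the example are all assumptions, not values from the scenario.

```python
def cpu_alarm_params(instance_id: str, sns_topic_arn: str, threshold: float = 80.0) -> dict:
    """Build alarm parameters for an EC2 CPU utilization alarm that notifies SNS."""
    return {
        "AlarmName": f"high-cpu-{instance_id}",  # hypothetical naming scheme
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,            # seconds; assumed
        "EvaluationPeriods": 1,   # assumed
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # The SNS topic delivers the email to its subscribers.
        "AlarmActions": [sns_topic_arn],
    }


params = cpu_alarm_params(
    "i-0123456789abcdef0",
    "arn:aws:sns:us-east-1:111122223333:prod-cpu-alerts",
)
```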

- A SysOps admin is responsible for managing a company's cloud infrastructure with AWS CloudFormation. The SysOps admin needs to create a single resource that consists of multiple AWS services. The resource must support creation and deletion through the CloudFormation console.

To meet these requirements, the SysOps admin should create a CloudFormation custom resource of type Custom::MyCustomType.

Custom resources make it possible to write custom provisioning logic in templates, which AWS CloudFormation runs whenever a stack is created, updated (if the custom resource changed), or deleted. For example, you might want to include resources that are not available as AWS CloudFormation resource types. You can include those resources by using custom resources.

That way, all related resources can still be managed in a single stack.
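The provisioning logic behind a custom resource is typically a handler that reacts to the request type and reports the result back to CloudFormation. The sketch below shows only that dispatch and the shape of the response body. The actual provisioning steps and the HTTP PUT to the request's `ResponseURL` are omitted, and the physical ID `my-composite-resource` is a hypothetical placeholder.

```python
def build_response(event: dict, status: str, physical_id: str, data=None, reason: str = "") -> dict:
    """Build the response body CloudFormation expects from a custom resource."""
    return {
        "Status": status,  # "SUCCESS" or "FAILED"
        "Reason": reason,  # should explain the failure when status is FAILED
        "PhysicalResourceId": physical_id,
        "StackId": event["StackId"],
        "RequestId": event["RequestId"],
        "LogicalResourceId": event["LogicalResourceId"],
        "Data": data or {},
    }


def handle(event: dict) -> dict:
    """Dispatch on the request type; real provisioning calls would go here."""
    physical_id = event.get("PhysicalResourceId", "my-composite-resource")
    request_type = event["RequestType"]
    if request_type in ("Create", "Update"):
        # Placeholder: create or update the underlying services here.
        return build_response(event, "SUCCESS", physical_id, data={"Action": request_type})
    if request_type == "Delete":
        # Placeholder: tear down the underlying services here.
        return build_response(event, "SUCCESS", physical_id)
    return build_response(event, "FAILED", physical_id, reason=f"Unknown request type {request_type}")
```

In a real handler the returned dictionary would be serialized to JSON and sent to the pre-signed URL in `event["ResponseURL"]`; until CloudFormation receives it, the stack operation keeps waiting.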

- A SysOps Admin manages a fleet of Amazon EC2 instances running a distribution of Linux. The OSs are patched on a schedule using AWS Systems Manager Patch Manager. Users of the application have complained about poor response times when the systems are being patched.

To ensure that patches are deployed automatically with MINIMAL customer impact, configure the maintenance window to patch 10% of the instances in the patch group at a time.
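To see why a 10% limit reduces impact, the sketch below splits a fleet into patch waves so that roughly 90% of instances keep serving traffic at any moment. Systems Manager does this batching itself through the maintenance window concurrency setting; the function name and the round-up behaviour here are assumptions made for illustration only.

```python
import math


def patch_batches(instance_ids: list, percent: int = 10) -> list:
    """Split instances into consecutive batches of about `percent` of the fleet."""
    if not instance_ids:
        return []
    # Round up so that small fleets still patch at least one instance per wave.
    batch_size = max(1, math.ceil(len(instance_ids) * percent / 100))
    return [
        instance_ids[start:start + batch_size]
        for start in range(0, len(instance_ids), batch_size)
    ]


# Hypothetical fleet of 25 instances.
fleet = [f"i-{n:04d}" for n in range(25)]
waves = patch_batches(fleet)
```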

- A global gaming company is preparing to launch a new game on AWS. The game runs in multiple AWS Regions on a fleet of Amazon EC2 instances. The instances are in an Auto Scaling group behind an Application Load Balancer (ALB) in each Region. The company plans to use Amazon Route 53 for DNS services. The DNS configuration must direct users to the Region that is closest to them and must provide automated failover.

To configure Route 53 to meet these requirements, a SysOps admin should create Amazon CloudWatch alarms that monitor the health of the ALB in each Region and configure Route 53 DNS failover by using a health check that monitors the alarms. The admin should also configure Route 53 geoproximity routing and specify the Regions that are used for the infrastructure.

Monitoring the health of the EC2 instances is not sufficient to provide failover as the EC2 instances are in an Auto Scaling group and instances can be added or removed dynamically.

Monitoring the private IP address of an EC2 instance is not sufficient to determine the health of the infrastructure, as the instance may still be running but the application or service on the instance may be unhealthy.

Simple routing does not take into account geographic proximity.

- A company has a workload that is sending log data to Amazon CloudWatch Logs. One of the fields includes a measure of application latency. A SysOps admin needs to monitor the p90 statistic of this field over time.

To meet this requirement, the SysOps admin should create a metric filter on the log data.
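Once the metric filter publishes the latency field as a metric, CloudWatch can report its p90 statistic. To illustrate what p90 means, the sketch below computes it locally with the nearest-rank method. The sample values are made up, and CloudWatch's own percentile calculation may differ in detail.

```python
import math


def percentile_nearest_rank(values: list, percent: int) -> float:
    """Return the smallest value with at least `percent`% of samples at or below it."""
    if not values:
        raise ValueError("need at least one sample")
    if not 0 < percent <= 100:
        raise ValueError("percent must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(percent * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical latency samples in milliseconds, as extracted from log events.
latencies_ms = [100, 20, 30, 90, 50, 60, 70, 80, 40, 10]
p90 = percentile_nearest_rank(latencies_ms, 90)
```

Here nine of the ten samples are at or below the returned value, so a single slow outlier does not dominate the statistic the way it would with a maximum.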

- A SysOps admin must configure a resilient tier of Amazon EC2 instances for a High Performance Computing (HPC) application. The HPC application requires minimum latency between nodes.

To meet these requirements, the SysOps admin should place the EC2 instances in an Auto Scaling group within a single subnet and launch the EC2 instances into a cluster placement group.

- A company has a web application with a DB tier that consists of an Amazon EC2 instance that runs MySQL. A SysOps admin needs to minimize potential data loss and the time that is required to recover in the event of a DB failure.

The MOST operationally efficient solution that meets these requirements is to use Amazon Data Lifecycle Manager (DLM) to take a snapshot of the Amazon Elastic Block Store (EBS) volume every hour. In the event of an EC2 instance failure, restore the EBS volume from a snapshot.

- If developers are given full admin access, the only way to ensure compliance with the corporate policy is to use AWS Organizations service control policies (SCPs) to restrict the API actions related to the specific restricted services.
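A minimal sketch of such an SCP, built as a Python dictionary. The service prefixes in the example are hypothetical stand-ins for whatever the corporate policy restricts, and the statement ID is an arbitrary label.

```python
import json


def deny_services_scp(service_prefixes: list) -> dict:
    """Build an SCP document that denies every action of the given services."""
    if not service_prefixes:
        raise ValueError("at least one service prefix is required")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyRestrictedServices",
                "Effect": "Deny",
                # An explicit Deny in an SCP overrides any Allow in IAM,
                # including full administrator access.
                "Action": [f"{prefix}:*" for prefix in service_prefixes],
                "Resource": "*",
            }
        ],
    }


# Hypothetical restricted services.
scp = deny_services_scp(["redshift", "sagemaker"])
scp_json = json.dumps(scp, indent=2)
```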

- A company has set up an IPSec tunnel between its AWS environment and its on-premises data center. The tunnel is reporting as UP, but the Amazon EC2 instances are not able to ping any on-premises resources.

To resolve this issue, a SysOps admin should create a new inbound rule on the EC2 instances' security groups to allow ICMP traffic from the on-premises CIDR.
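The inbound rule can be sketched as the permission entry a security group ingress call would take. The CIDR `10.0.0.0/8` is a hypothetical on-premises range, and the rule allows all ICMP types and codes, which is broader than ping alone strictly requires.

```python
import ipaddress


def icmp_ingress_rule(on_prem_cidr: str) -> dict:
    """Build a security group permission that allows ICMP from the given CIDR."""
    # Raises ValueError if the CIDR is malformed.
    ipaddress.ip_network(on_prem_cidr)
    return {
        "IpProtocol": "icmp",
        # For ICMP, FromPort is the ICMP type and ToPort the code; -1 means all.
        "FromPort": -1,
        "ToPort": -1,
        "IpRanges": [{"CidrIp": on_prem_cidr, "Description": "Ping from on-premises"}],
    }


rule = icmp_ingress_rule("10.0.0.0/8")
```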

- If users have reported high latency and connection instability for an application in another Region, create an accelerator in AWS Global Accelerator and update the DNS record to improve availability and performance. Global Accelerator provides static IP addresses that act as a fixed entry point to applications and eliminates the complexity of managing specific IP addresses for different AWS Regions and Availability Zones.

It always routes user traffic to the optimal endpoint based on performance, reacting instantly to changes in application health, the user's location, and the policies that you configure.

CloudFront cannot be used for the SFTP protocol.

- A company monitors its account activity using AWS CloudTrail, and is concerned that some log files are being tampered with after the logs have been delivered to the account's Amazon S3 bucket.

Moving forward, the SysOps admin can confirm that the log files have not been modified after delivery to the S3 bucket by enabling log file integrity validation and using digest files to verify the hash value of each log file.
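The core of the check is a hash comparison, sketched below. It assumes a digest entry with `hashAlgorithm` and `hashValue` fields and assumes the hash covers the uncompressed log file content. Full validation also verifies the digest file's own signature and the chain of digest files, which this sketch leaves out.

```python
import hashlib


def log_file_matches_digest(log_bytes: bytes, digest_entry: dict) -> bool:
    """Compare a log file's SHA-256 hash with the value recorded in a digest entry."""
    if digest_entry.get("hashAlgorithm") != "SHA-256":
        raise ValueError("this sketch only handles SHA-256 digest entries")
    actual = hashlib.sha256(log_bytes).hexdigest()
    return actual == digest_entry["hashValue"].lower()


# Hypothetical log content and the digest entry recorded at delivery time.
original = b'{"Records": []}'
entry = {"hashAlgorithm": "SHA-256", "hashValue": hashlib.sha256(original).hexdigest()}

untouched_ok = log_file_matches_digest(original, entry)
tampered_ok = log_file_matches_digest(b'{"Records": [{"edited": true}]}', entry)
```

Any change to the file after delivery alters its hash, so the tampered copy no longer matches the entry stored in the digest file.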

- A company uses several large Chef recipes to automate the configuration of Virtual Machines (VMs) in its data center. A SysOps admin is migrating this workload to Amazon EC2 instances on AWS and must run the existing Chef recipes.

The solution that meets these requirements MOST cost-effectively is to set up AWS OpsWorks for Chef Automate, migrate the existing recipes, and modify the EC2 instance user data to connect to Chef.

- A company needs to ensure strict adherence to a budget for 25 applications deployed on AWS. Separate teams are responsible for storage, compute, and DB costs. A SysOps admin must implement an automated solution to alert each team when their projected spend will exceed a quarterly amount that has been set by the finance department. The solution cannot incur additional compute, storage, or DB costs.

The solution that meets these requirements is to use AWS Budgets to create a cost budget for each team, filtering by the services they own. Specify the budget amount defined by the finance department along with a forecasted cost threshold. Enter the appropriate email recipients for each budget.
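A rough sketch of one team's budget and its forecast alert, shaped like the input to a Budgets create-budget call. The team name, service name, amount, email address, and the 100% forecast threshold are all hypothetical, and filtering by a `Service` cost filter is one possible way to scope the budget.

```python
def team_budget(team: str, services: list, quarterly_limit_usd: float, emails: list):
    """Build a quarterly cost budget and a forecast-based email notification."""
    budget = {
        "BudgetName": f"{team}-quarterly",
        "BudgetType": "COST",
        "TimeUnit": "QUARTERLY",
        # The Budgets API takes the amount as a string.
        "BudgetLimit": {"Amount": f"{quarterly_limit_usd:.2f}", "Unit": "USD"},
        "CostFilters": {"Service": services},
    }
    notification = {
        "Notification": {
            # FORECASTED alerts fire on projected spend, before the limit is hit.
            "NotificationType": "FORECASTED",
            "ComparisonOperator": "GREATER_THAN",
            "Threshold": 100.0,
            "ThresholdType": "PERCENTAGE",
        },
        "Subscribers": [{"SubscriptionType": "EMAIL", "Address": e} for e in emails],
    }
    return budget, notification


budget, alert = team_budget(
    "storage", ["Amazon Simple Storage Service"], 12000, ["storage-team@example.com"]
)
```

Repeating this for the compute and DB teams gives each team its own alert without running any extra compute, storage, or DB resources.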
