PlAwAnSaI

Administrator

Work Hard. Have Fun. Make History.

Index:

- Tech Fundamentals

- AWS S3

- Azure Fundamentals

- VPC Basics

- Containers, ECS & ECR

- EC2

- Infrastructure as Code (IaC)

- DNS & CDN

- Lab#1: Migrate from VMs on-prem to AWS EC2 & RDS

- Lab#2: Web App Architecture Evolution from Single EC2 to Elastic

- Learn the fundamentals of AWS Cloud:

- What is Cloud Computing?

- What is AWS?

- Types of Cloud Computing

- Cloud Computing and AWS

- Overview of AWS fundamentals

- Key concepts of AWS Fundamentals

-

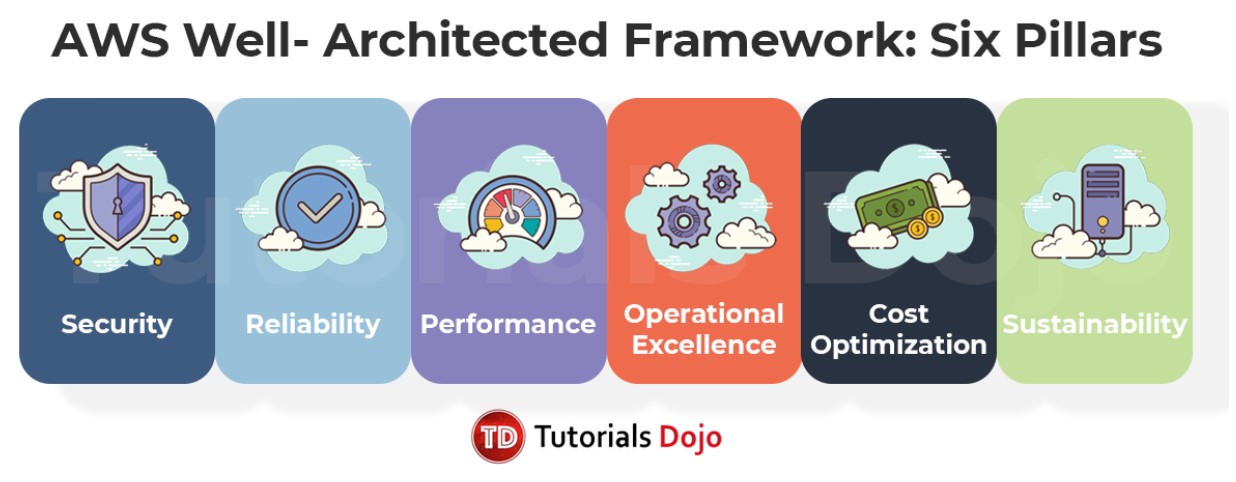

- Operational Excellence: automate operations

IaC lets you provision services automatically, using the same tools and processes you already use for code.

Observability means collecting, analyzing, and acting on metrics to continuously improve operations.

- Security: Zero Trust

IAM follows the principle of Least Privilege (grant only the level of access that is needed).

AWS Network Security uses a Defense in Depth design, that is, security built in multiple layers. The more techniques or processes there are for detecting threats, the higher the chance that a threat is detected, which makes it possible to stop an attacker before they infiltrate the system.

Data Encryption is applied both to data sent between systems (in transit) and to data inside a system (at rest).

- Reliability: Blast Radius (the scope of impact when something fails)

Fault Isolation Zones are used to limit the Blast Radius.

Limits are used to avoid service disruption.

- Performance Efficiency: treat servers as cattle, not pets

Select the right services and configurations for your performance goals.

Services can scale in two ways: vertically and horizontally.

- Cost Optimization:

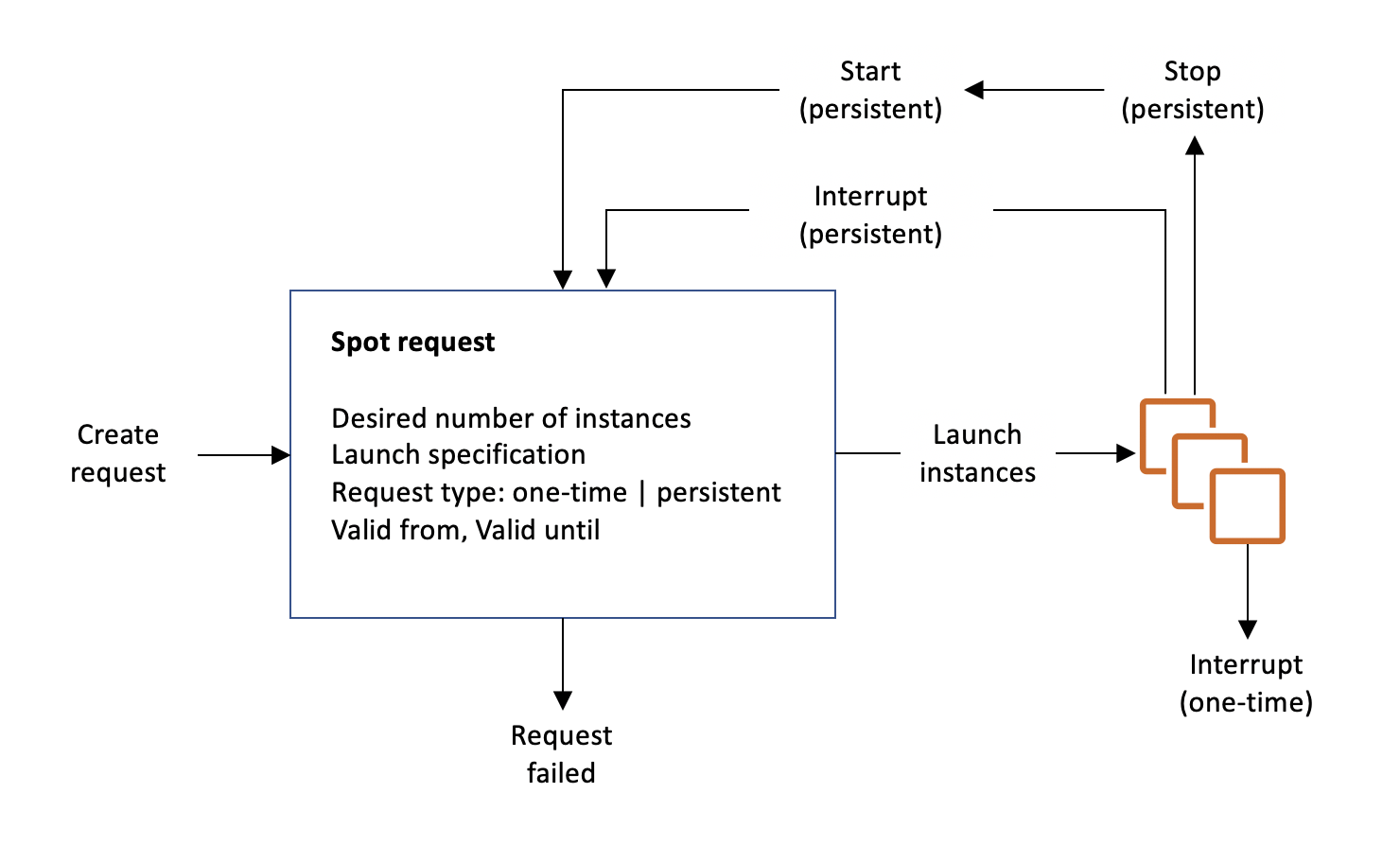

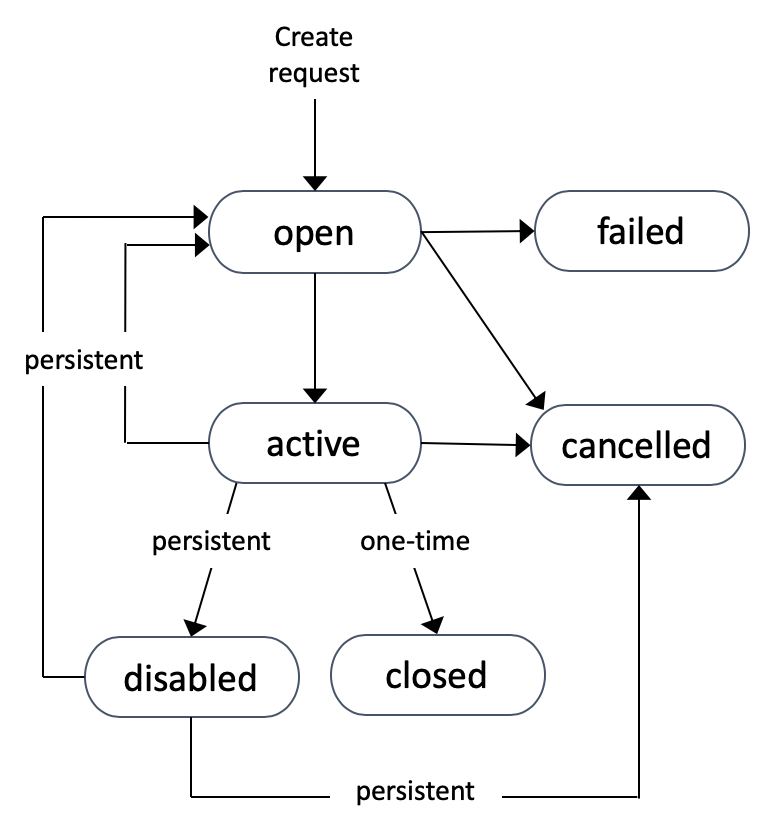

The spending model focuses on OpEx, with techniques such as right sizing, serverless technology, reservations, and Spot Instances.

Monitor and optimize the budget using tools such as Cost Explorer, Tags, and Budgets.
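
The Least Privilege principle listed under Security above can be made concrete with an IAM policy document that grants a single action on a single resource. The following is a minimal sketch, assuming a hypothetical bucket name `example-reports-bucket`, a hypothetical prefix `reports/`, and a hypothetical helper `read_only_bucket_policy`; none of these come from the original post. It only builds the JSON document and does not call AWS.

```python
import json


def read_only_bucket_policy(bucket_name: str, prefix: str = "") -> dict:
    """Build an IAM policy document that only allows reading objects under one prefix."""
    # Scope the permission to objects under the prefix, not the whole account or bucket.
    resource = f"arn:aws:s3:::{bucket_name}/{prefix}*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadReportsOnly",
                "Effect": "Allow",
                # One read action only: no write, delete, or list permissions.
                "Action": ["s3:GetObject"],
                "Resource": [resource],
            }
        ],
    }


# Hypothetical bucket and prefix, used purely for illustration.
policy = read_only_bucket_policy("example-reports-bucket", "reports/")
print(json.dumps(policy, indent=2))
```

Anything not explicitly allowed is denied by default in IAM, so a policy like this leaves the holder unable to modify or delete the data it can read.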

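
The IaC idea under Operational Excellence, provisioning services with the same tools used for code, can be sketched by generating an AWS CloudFormation template from Python. This is a minimal sketch rather than a deployment: the logical ID `ExampleBucket` and the helper `bucket_template` are hypothetical names, and the resulting JSON would still have to be submitted to CloudFormation separately.

```python
import json


def bucket_template(logical_id: str, versioned: bool = True) -> dict:
    """Return a CloudFormation template (as a dict) that declares one S3 bucket."""
    resource = {"Type": "AWS::S3::Bucket", "Properties": {}}
    if versioned:
        # Keep previous object versions so accidental overwrites can be rolled back.
        resource["Properties"]["VersioningConfiguration"] = {"Status": "Enabled"}
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative template: a single S3 bucket",
        "Resources": {logical_id: resource},
    }


# The template is plain data, so it can be reviewed, diffed, and versioned like code.
template = bucket_template("ExampleBucket")
print(json.dumps(template, indent=2))
```

Because the infrastructure is described as data, the same review, version control, and automation steps used for application code can apply to it.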

- AWS Overview

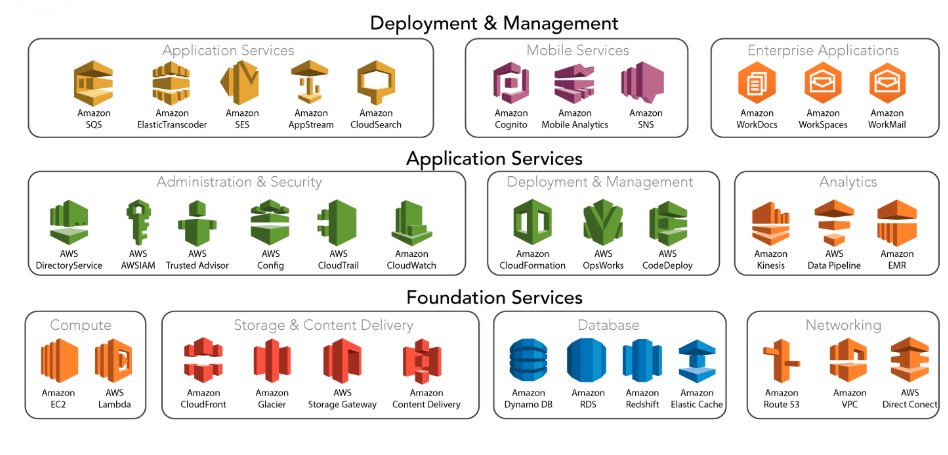

AWS provides basic building blocks that can be assembled quickly to support almost any type of workload. With AWS, you will find a set of highly available services designed to work together, helping you build scalable, sophisticated applications.

You get access to highly durable storage, low-cost compute, high-performance databases, management tools, and more. All of this is available with no upfront cost; you pay only for what you use. These services help organizations move faster, lower IT costs, and scale on demand. AWS is trusted by the largest enterprises and the hottest start-ups to power a wide variety of workloads, including web and mobile applications, game development, data processing and data warehousing, storage, and many others.

- AWS Global Infrastructure

- AWS Glossary

- Amazon Web Services (AWS): Amazon Web Services, or AWS, is a Cloud Computing provider operated by Amazon. Its services fall into three broad groups: IaaS, PaaS, and SaaS. Well-known AWS products include Amazon EC2, AWS Elastic Beanstalk, and Amazon S3.

- Auto Scaling (AS): A service that automatically adjusts resources according to defined rules. It suits situations where usage needs a large amount of resources urgently during a particular period, and it makes resource management much more efficient. (This service must be requested as a special case.)

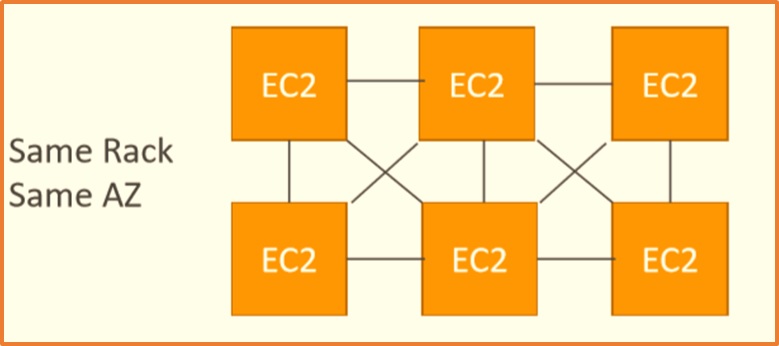

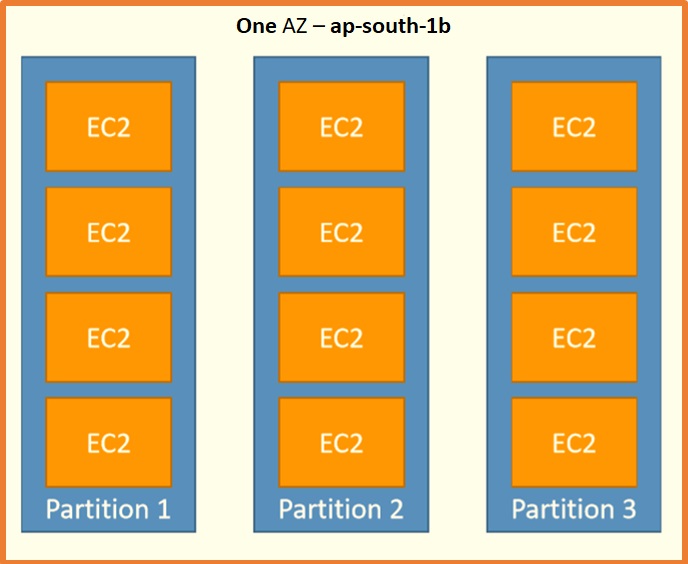

- Availability Zones: Comparable to data centers that provide computing resources. If one Availability Zone has a problem, the other Availability Zones are not affected.

- Cloud Service Provider (CSP): A company that provides Cloud Computing services, whether PaaS, IaaS, or SaaS.

- Container: A technology that works like a package holding software, programs, or applications so they can run on any server. It cuts down the steps needed to install programs or tools.

- Content Delivery Network (CDN): A large network of servers connected around the world over the Internet. Its job is to deliver data to the end recipient as quickly and efficiently as possible and to keep it available to viewers at all times.

- Elastic Block Store (EBS): High-performance block storage, used to store data for throughput-intensive and transaction-intensive workloads.

- Elastic Container Service (ECS): A high-performance container management service that scales easily to support Docker containers, letting you run and scale containerized applications on demand.

- Elastic IP: A static IP address. In AWS, an Elastic IP address is a public IPv4 address allocated to your account; you can associate it with an instance to reach the Internet and move it to another instance when needed, which gives flexibility in use.

- Object Storage (S3): Cloud storage, or an object store, built to hold any kind of data, which can then be analyzed and accessed from anywhere, whether it belongs to a website, a mobile app, or any other data you need.

- Resource: A limited computing asset, such as processing capacity, that a system uses to do the work the user has specified.

- Virtual Private Cloud (VPC): A system that lets users create separate Virtual Networks for each system and manage them conveniently, making network design and the use of cloud resources more secure.
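
To make the VPC entry more concrete, the sketch below plans how one VPC address range could be divided into separate subnets, using only Python's standard `ipaddress` module. The range `10.0.0.0/16`, the subnet names, and the helper `plan_subnets` are assumptions chosen for illustration; nothing here calls AWS.

```python
import ipaddress
from itertools import islice

# Hypothetical VPC range and subnet names, chosen only for illustration.
VPC_CIDR = "10.0.0.0/16"
SUBNET_NAMES = ["public-a", "public-b", "private-a", "private-b"]

# AWS reserves 5 addresses in every subnet (the first four and the last one).
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr: str, names: list[str], new_prefix: int = 24) -> dict:
    """Assign consecutive, non-overlapping blocks of the VPC range to the given names."""
    vpc = ipaddress.ip_network(vpc_cidr)
    # subnets() yields blocks lazily; take only as many as there are names.
    blocks = islice(vpc.subnets(new_prefix=new_prefix), len(names))
    return dict(zip(names, blocks))


layout = plan_subnets(VPC_CIDR, SUBNET_NAMES)
for name, net in layout.items():
    usable = net.num_addresses - AWS_RESERVED_PER_SUBNET
    print(f"{name:10} {net}  usable addresses: {usable}")
```

Keeping public and private workloads in separate blocks like this is one common way to get the isolation the VPC entry describes; the actual split depends on the workload.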
